By 2016, Airbnb’s product had grown from a simple marketplace into a platform serving millions of users and built by dozens of teams. Along the way, something had quietly broken. As teams moved fast and solved problems independently, design decisions accumulated: a button style here, a spacing adjustment there, a new colour shade for a specific feature. Each change made sense at the time. Each solved a real problem.

But when the design team finally audited what they’d built, they discovered fragmentation everywhere. As Karri Saarinen, who led the consolidation project, wrote: “We wound up with many different kinds with some inconsistencies” even in core repeated components. What started as a unified vision had fractured into dozens of variations, making it nearly impossible to maintain consistency or move quickly.

This is drift. Not a single catastrophic failure, but the slow erosion of intent through hundreds of reasonable, locally optimal decisions that compound into systemic decay.

I first recognised the pattern whilst working with AI assistants. I’d give explicit instructions: “Use British spelling. Start responses with the key metric. No preamble.” The AI would comply, then gradually revert. Not because it was ignoring me, but because it was trained to predict the most statistically likely output, not to maintain my specific intent. Over time, it would drift back towards its training data’s dominant patterns.

That’s when it clicked: AI drift isn’t a quirk of language models. It’s a fundamental characteristic of any system, human or machine, that lacks continuous reinforcement of intent. The AI was just making it visible in real time.

The Anatomy of Drift

Drift occurs when the gap between stated intent and actual implementation grows incrementally, often invisibly, until the original vision becomes unrecognisable. It’s not a sudden break. It’s compound decay.

Here’s how it manifests across product work:

Design Systems: Drift by Proliferation

You launch a design system with clear principles: semantic naming, consistent spacing scales, reusable components. Six months later, you audit the codebase and find:

- PrimaryButton, MainButton, ActionBtn and CTA-Primary: four names for the same button

- Spacing values of 8px, 10px, 12px, 15px and 16px (your scale was 8, 16, 24)

- Three different implementations of the same modal component

Each deviation happened because a designer faced a deadline, a developer needed a quick fix, or a stakeholder requested “just slightly different padding.” The system didn’t break. It eroded.

Real Example: When Airbnb launched its Design Language System (DLS) in 2016, it was specifically to address “fragmentation, complexity and performance” issues caused by inconsistent design decisions across teams. Even core, repeated components had splintered into inconsistent variants. The consolidation required clearing calendars, assembling a dedicated team, and months of focused work to audit and rebuild the system.

Data Models: Drift by Redefinition

You define “active user” as someone who’s logged in within 30 days. Clear enough. Then:

- Marketing redefines it as “opened an email in 30 days” for their reports

- Product uses “completed core action in 14 days” for retention metrics

- Finance calculates “made a transaction in 90 days” for revenue forecasting

No single change was wrong. But now your exec dashboard shows three different active user counts, and nobody can agree on whether you’re growing or shrinking.

The Problem: When metric definitions diverge across teams, strategic decisions get made on fundamentally inconsistent data. You’re not comparing like with like, yet the dashboards look authoritative enough that people act on them.

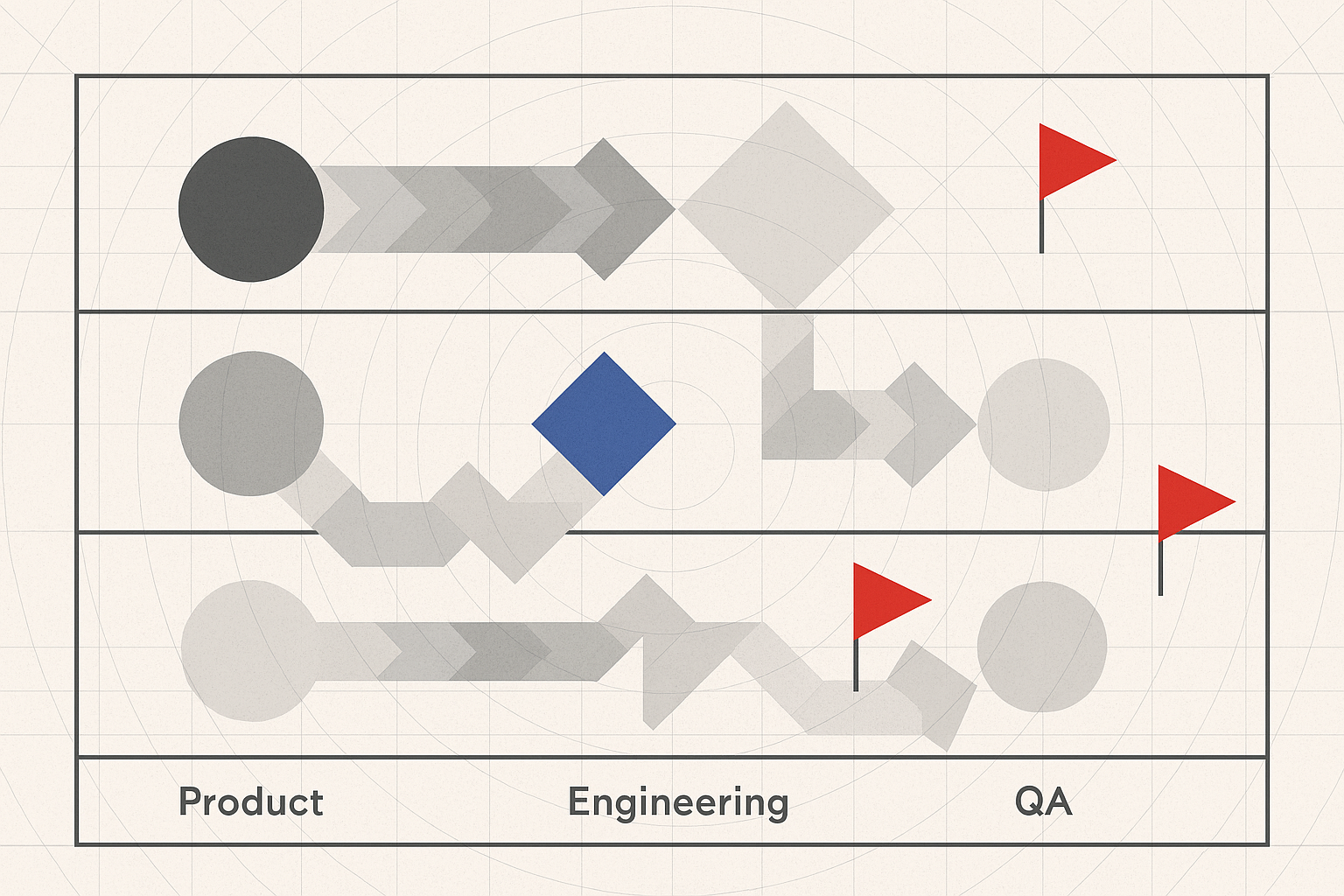

Roadmaps: Drift by Accretion

You set a strategic direction: “Become the fastest checkout experience in e-commerce.” Clear goal. Then requests arrive:

- “Can we add a promo code field?” (Marketing)

- “We need this compliance notification.” (Legal)

- “Users want saved payment methods.” (Research)

- “What about guest checkout stats?” (Analytics)

Each is defensible. Each adds friction. Collectively, they dilute your original strategy. The “fast checkout” that started with 3 essential fields now has 14 fields, 3 optional steps, and converts worse than when you started.

Real Impact: Multiple studies (HubSpot, Formstack, Marketing Experiments) consistently show that additional form fields reduce conversion rates, though not always linearly. In HubSpot’s study, the difference between 3 and 4 form fields caused conversions to drop by nearly half.

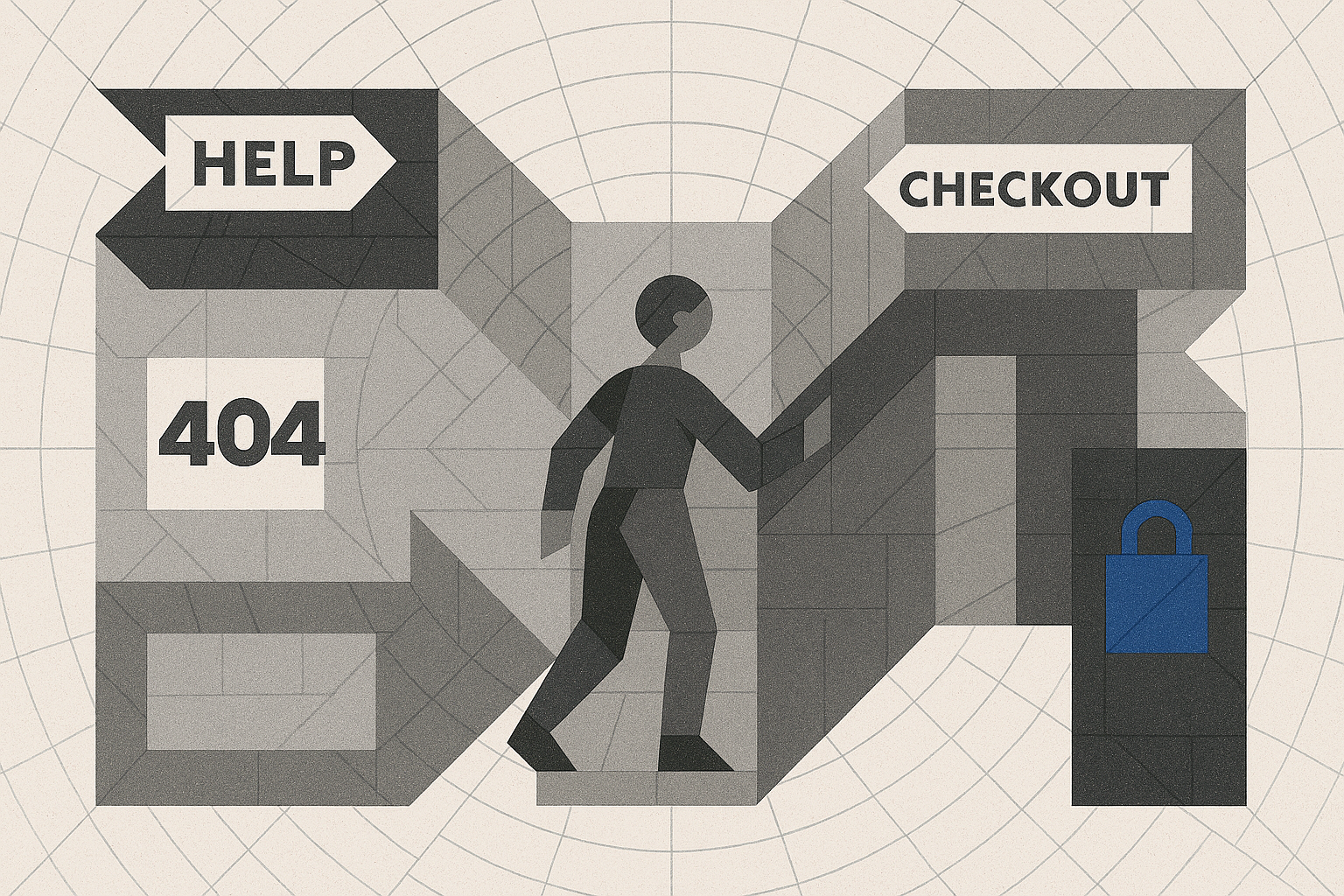

The most famous example of form field friction comes from Expedia. In 2010, their analytics team noticed a discrepancy: people were clicking “Buy Now” after entering all their booking details, but the corresponding revenue wasn’t materialising. The transactions were failing.

When analysts investigated the common traits of failed transactions, they discovered the culprit: an optional “Company” field. Customers were interpreting this field as a place to enter their bank name (since they were entering payment details). After entering their bank name, they’d then enter their bank’s address in the address field rather than their home address. When Expedia attempted to verify the address for credit card processing, it failed because it didn’t match the cardholder’s home address.

The solution was simple: delete the field. The result was immediate: $12 million in recovered annual revenue, plus significant savings in customer service time dealing with failed bookings.

Joe Megibow, Expedia’s VP of Global Analytics and Optimisation at the time, noted: “We have found 50 or 60 of these kinds of things by using analytics and paying attention to the customer.” This wasn’t a one-off discovery. It was part of a systematic approach to identifying and removing friction.

The lesson: every field in your checkout is an opportunity for confusion, hesitation, or abandonment. If a field isn’t absolutely essential to complete the transaction, it’s costing you conversions.

AI Systems: Drift by Probability

You build a customer service AI with strict tone guidelines: professional, concise, British spelling. You prompt it with examples and instructions. It works brilliantly, until:

- It starts adding American spellings (“color,” “optimize”)

- Responses become wordier, adding unnecessary preambles

- The tone shifts subtly towards the more common patterns in its training data

The AI isn’t malfunctioning. It’s doing exactly what it was trained to do: predict the most statistically likely next token. Without constant reinforcement, it drifts towards the centre of its training distribution.

The Pattern: This is probability overwhelming intent. AI systems trained on massive datasets will revert to dominant patterns unless explicitly constrained. The same principle applies to human systems: without active maintenance, they default to what’s easiest or most common, not what was originally intended.

Why Drift Is Inevitable

Drift isn’t a bug. It’s the natural state of any system lacking active maintenance. Here’s why it’s so persistent:

Entropy Is the Default. Systems naturally move towards disorder: in code, in teams, in processes. Order requires energy. Drift is what happens when that energy isn’t applied.

Local Optimisation Beats Global Vision. Each team optimises for their immediate problem. A designer facing a deadline uses a colour that’s “close enough.” An engineer copies and modifies code rather than refactoring. A PM adds a feature that solves one user’s problem but contradicts the broader strategy. None of these decisions are wrong locally. They’re only wrong systemically.

Intent Fades, Code Doesn’t. The reasons behind decisions get lost. Someone sees color: #F4F4F4 in the codebase and assumes it’s intentional. They copy it. Now you have two greys. Then four. The original decision (perhaps a temporary hack) becomes precedent.

Feedback Loops Are Weak. Drift is slow. You don’t notice the first deviation, or the tenth. By the time it’s obvious, it’s already endemic. Unlike a broken feature (immediate feedback), drift accumulates silently.

What Drift Is NOT: Drift requires change over time and accumulation. A single design flaw that’s always been present isn’t drift. For example, Expedia’s “Company” field cost them $12 million annually, but that was a static UX mistake, not drift. Drift would be if their checkout started with 3 fields and gradually accumulated to 20 through incremental additions, each reasonable in isolation.

The Drift Diagnostic

Not all drift is bad. Sometimes systems need to evolve. Sometimes what looks like drift is actually healthy adaptation. Here’s how to tell the difference:

The Three-Question Test:

- Can you articulate the original intent? If nobody remembers why a decision was made, drift is inevitable. The intent is already lost.

- Is the deviation serving a new, legitimate goal? If the new pattern solves a real problem better than the original, that’s evolution. If it’s just easier or more convenient, that’s drift.

- Can you consistently replicate the new pattern? If the deviation becomes the new standard and is applied consistently going forward, it’s an intentional change. If it’s a one-off that coexists with the original pattern, it’s drift.

Example: Your design system uses 8px spacing. A team introduces 12px for a specific mobile component where 8px creates touch-target issues. If they document this as a mobile-specific override, update the system, and apply it consistently, that’s evolution. If they just use 12px in one place and 8px everywhere else, that’s drift.
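One way to read the three-question test is as a strict gate, where any "no" means drift. The text doesn't spell out how the answers combine, so treat that rule, and the hypothetical `Deviation` and `classify` names, as assumptions in this minimal sketch:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deviation:
    """Answers to the three diagnostic questions for one deviation."""
    original_intent_known: bool  # Q1: can you articulate the original intent?
    serves_new_goal: bool        # Q2: does it solve a real problem better, not just more easily?
    applied_consistently: bool   # Q3: is it the new documented standard, not a one-off?


def classify(d: Deviation) -> str:
    """Return 'evolution' only when all three answers are yes; otherwise 'drift'."""
    if not d.original_intent_known:
        return "drift"  # intent already lost: there is no baseline to evolve from
    if d.serves_new_goal and d.applied_consistently:
        return "evolution"
    return "drift"


# The 12px mobile example: documented override vs. a one-off value.
documented_override = Deviation(True, True, True)
one_off = Deviation(True, True, False)
```

The documented mobile override answers yes to all three questions; the one-off 12px value fails the third, so it classifies as drift.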

Designing Drift Resistance

You can’t eliminate drift, but you can architect systems that resist it and make it visible when it occurs.

1. Encode Intent as Constraints

Don’t just document decisions. Enforce them.

Design Systems: Use design tokens, not arbitrary values. Instead of color: #3366CC, use color: var(--primary-600). When someone needs a different blue, they can’t just pick one. They have to modify the token, which creates visibility and friction.
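The token rule above can be sketched in a few lines, assuming tokens live in a single dictionary. The token names and the `resolve_colour` and `css_var` helpers are hypothetical; the point is that only registered tokens resolve, and a raw hex value fails loudly instead of rendering in a slightly different shade.

```python
# Hypothetical token table: the single source of truth for colours.
COLOUR_TOKENS = {
    "primary-600": "#3366CC",
    "neutral-100": "#F4F4F4",
}


class UnknownTokenError(ValueError):
    """Raised when code asks for a colour that isn't a registered token."""


def resolve_colour(token: str) -> str:
    """Return the hex value for a token name, refusing raw or unknown values."""
    if token.startswith("#"):
        # A raw hex value bypasses the system entirely: reject it.
        raise UnknownTokenError(f"raw colour {token!r} used; reuse or add a token instead")
    try:
        return COLOUR_TOKENS[token]
    except KeyError:
        raise UnknownTokenError(f"unknown colour token {token!r}") from None


def css_var(token: str) -> str:
    """Emit a CSS custom-property reference, validating the token first."""
    resolve_colour(token)
    return f"var(--{token})"
```

Needing a new blue now means editing `COLOUR_TOKENS`, which is exactly the visible, reviewable change the text asks for.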

Data Definitions: Use schema validation and calculated fields. If “active user” is “logged in within 30 days,” don’t let teams query raw login tables and calculate it themselves. Provide a view or API that enforces the definition. Make deviation technically difficult.
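In a data warehouse this would more likely be a SQL view; the sketch below expresses the same idea as a shared Python module that every dashboard imports. `User`, `is_active` and `active_user_count` are hypothetical names, and treating the 30-day boundary as inclusive is an assumption the text doesn't settle.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional

# The single agreed definition: logged in within the last 30 days.
ACTIVE_WINDOW = timedelta(days=30)


@dataclass(frozen=True)
class User:
    user_id: str
    last_login: Optional[date]  # None if the user has never logged in


def is_active(user: User, as_of: date) -> bool:
    """Canonical rule: last login no more than 30 days before `as_of` (inclusive)."""
    if user.last_login is None:
        return False
    elapsed = as_of - user.last_login
    return timedelta(0) <= elapsed <= ACTIVE_WINDOW


def active_user_count(users: Iterable[User], as_of: date) -> int:
    """The one sanctioned way to count active users; dashboards call this, not raw login tables."""
    return sum(1 for user in users if is_active(user, as_of))
```

If Marketing needs "opened an email in 30 days", that becomes a separately named metric rather than a quiet redefinition of "active user".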

Roadmaps: Use decision records. When you add a feature, document: What problem does this solve? How does it ladder up to strategy? What’s the success metric? Now, when someone proposes a deviation, you have a comparison point.
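A decision record can be as small as a structure whose fields are the questions above, with blank answers rejected at creation time. This is a minimal sketch; the `DecisionRecord` name and its fields are hypothetical, and many teams keep such records as documents (for example, ADR-style Markdown files) rather than code.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical minimal decision record for one roadmap item."""
    feature: str
    problem: str         # What problem does this solve?
    strategy_link: str   # How does it ladder up to strategy?
    success_metric: str  # What's the success metric?

    def __post_init__(self) -> None:
        # Refuse records with blank answers so every feature carries its rationale.
        for field in fields(self):
            if not getattr(self, field.name).strip():
                raise ValueError(f"decision record is missing '{field.name}'")
```

A proposal that can't fill in `strategy_link` (say, a promo-code field on a "fastest checkout" roadmap) fails at creation, which gives the comparison point the text describes.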

AI Systems: Use system prompts with structured output formats. Don’t rely on free-form instructions. Use JSON schemas, required fields, and validation. Make the AI’s output structure non-negotiable.
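Where the model provider supports structured output, use it; the sketch below shows the complementary check on your own side, using only Python's standard `json` module. The field names `key_metric` and `answer` are hypothetical, and a fuller version would probably validate against a JSON Schema with a library such as `jsonschema`.

```python
import json

# Hypothetical output contract for a customer-service reply.
REQUIRED_FIELDS = {"key_metric": str, "answer": str}


def parse_reply(raw: str) -> dict:
    """Parse a model reply and enforce the output contract, raising on any deviation."""
    data = json.loads(raw)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = REQUIRED_FIELDS.keys() - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    extra = data.keys() - REQUIRED_FIELDS.keys()
    if extra:
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data[name], expected_type):
            raise ValueError(f"{name} must be {expected_type.__name__}")
    return data
```

A rejected reply can then be retried or escalated instead of reaching a customer; free-form preambles never parse as valid output.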

2. Build Drift Detectors

Make decay visible before it compounds.

Automated Audits: Run regular scans of your codebase for:

- Colour values outside your design tokens

- Spacing values outside your scale

- Components that duplicate existing patterns

- Data queries that redefine standard metrics
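The first two checks can start as a small script. This is a minimal sketch, assuming plain CSS source, a hex-only palette and the 8/16/24 spacing scale from the design-system example (plus 0); the allowed sets are placeholders. A production audit would more likely use a proper CSS parser or a linter such as Stylelint than regular expressions.

```python
import re

# Hypothetical allowed values: the token palette and the spacing scale.
ALLOWED_COLOURS = {"#3366cc", "#f4f4f4", "#ffffff"}
SPACING_SCALE = {0, 8, 16, 24}

HEX_COLOUR = re.compile(r"#(?:[0-9a-fA-F]{6}|[0-9a-fA-F]{3})\b")
SPACING_DECL = re.compile(r"\b(?:margin|padding|gap)(?:-[a-z]+)?\s*:\s*([^;}]+)")
PX_VALUE = re.compile(r"(-?\d+(?:\.\d+)?)px")


def normalise_hex(colour: str) -> str:
    """Lower-case a hex colour and expand #abc shorthand to #aabbcc."""
    colour = colour.lower()
    if len(colour) == 4:
        colour = "#" + "".join(ch * 2 for ch in colour[1:])
    return colour


def audit_css(css: str) -> dict:
    """Report hex colours outside the palette and px spacing values outside the scale."""
    colours = {normalise_hex(c) for c in HEX_COLOUR.findall(css)}
    off_scale = set()
    for declaration in SPACING_DECL.findall(css):
        for value in PX_VALUE.findall(declaration):
            if abs(float(value)) not in SPACING_SCALE:
                off_scale.add(float(value))
    return {
        "off_palette_colours": sorted(colours - ALLOWED_COLOURS),
        "off_scale_spacing": sorted(off_scale),
    }
```

Running it on every pull request turns "someone eventually notices" into a failing check.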

Drift Dashboards: Track drift metrics over time:

- Number of unique colour values in production

- Components created vs reused

- Fields added to core flows

- Prompt adherence rates in AI systems
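These metrics only become useful as a time series. A minimal sketch, assuming you already collect a monthly snapshot (the `Snapshot` fields and any figures you feed it are hypothetical), that reports how far the latest reading has moved from the baseline:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One monthly reading of drift metrics (fields are hypothetical)."""
    month: str
    unique_colours: int
    components_created: int
    components_reused: int
    checkout_fields: int

    @property
    def reuse_rate(self) -> float:
        """Share of component usages that reused an existing component rather than creating one."""
        total = self.components_created + self.components_reused
        return self.components_reused / total if total else 1.0


def drift_report(history: list[Snapshot]) -> dict:
    """Change in each metric between the first (baseline) and latest snapshot."""
    baseline, latest = history[0], history[-1]
    return {
        "unique_colours": latest.unique_colours - baseline.unique_colours,
        "checkout_fields": latest.checkout_fields - baseline.checkout_fields,
        "reuse_rate": round(latest.reuse_rate - baseline.reuse_rate, 3),
    }
```

Rising colour counts alongside a falling reuse rate is the signature of drift by proliferation.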

Human Review Gates: Require design system sign-off for new components. Require data governance approval for new metric definitions. Don’t rely on goodwill. Make compliance a prerequisite for shipping.

3. Reinforce Through Ritual

Repetition maintains calibration.

Design Reviews: Not just for new features, but for existing ones. Monthly audits asking: “Does this still match our principles? Have we drifted?”

Data Definition Meetings: Quarterly reviews of core metrics. Is everyone still using the same definitions? Have edge cases created ambiguity?

Roadmap Retrospectives: After each quarter, compare what you shipped to what you intended. Which features were strategic? Which were reactive? Are you drifting from your north star?

AI Prompt Maintenance: Weekly reviews of AI outputs. Are responses still matching your tone guide? Are formatting requirements being followed? Reinforce or adjust prompts accordingly.
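Part of the weekly review can be automated. This sketch checks two rules from the tone guide (British spelling and no preamble) and reports an adherence rate. The word list and preamble phrases are hypothetical and deliberately tiny, and exact-word matching will miss variants such as "colors".

```python
import re

# Hypothetical, deliberately small lookup; a real check would use a fuller dictionary.
AMERICAN_TO_BRITISH = {
    "color": "colour",
    "optimize": "optimise",
    "organization": "organisation",
    "analyze": "analyse",
}
PREAMBLE = re.compile(r"^\s*(certainly|sure|great question|of course)\b", re.IGNORECASE)
WORD = re.compile(r"[a-z]+")


def adherence_issues(response: str) -> list[str]:
    """List the style-guide rules a single response breaks."""
    issues = []
    words = set(WORD.findall(response.lower()))
    for us, uk in AMERICAN_TO_BRITISH.items():
        if us in words:
            issues.append(f"American spelling '{us}' (use '{uk}')")
    if PREAMBLE.match(response):
        issues.append("opens with a preamble")
    return issues


def adherence_rate(responses: list[str]) -> float:
    """Share of responses that break no rules; worth tracking week by week."""
    if not responses:
        return 1.0
    clean = sum(1 for r in responses if not adherence_issues(r))
    return clean / len(responses)
```

A falling rate is the cue to reinforce or adjust the prompt before the drift becomes the norm.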

4. Design for Degradation

Assume drift will happen. Plan for recovery.

Versioning and Rollback: Design systems should be versioned. If a team drifts from the current version, you can identify and update them systematically.
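Assuming each team pins a design-system release using semantic versioning (MAJOR.MINOR.PATCH), finding the teams that have fallen behind is a straightforward comparison. The team names are hypothetical, and this sketch ignores pre-release tags such as "-beta.1".

```python
def parse_version(version: str) -> tuple[int, int, int]:
    """Parse 'MAJOR.MINOR.PATCH' into a comparable tuple."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def outdated_teams(team_versions: dict[str, str], current: str) -> dict[str, str]:
    """Map each team behind the current release to how far behind it is ('major', 'minor' or 'patch')."""
    cur = parse_version(current)
    behind = {}
    for team, version in sorted(team_versions.items()):
        v = parse_version(version)
        if v >= cur:
            continue  # up to date (or ahead, e.g. testing a release candidate)
        if v[0] < cur[0]:
            behind[team] = "major"
        elif v[1] < cur[1]:
            behind[team] = "minor"
        else:
            behind[team] = "patch"
    return behind
```

Teams a major version behind are usually the ones carrying the most local workarounds, so they make a sensible first target for systematic updates.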

Graceful Decay Paths: Build components that fail obviously, not subtly. A button with an invalid colour should throw a linting error, not just render in a slightly different shade.

Scheduled Rebuilds: Every 12-18 months, do a clean rebuild of core systems. Start from first principles, keep what still makes sense, discard what’s drifted. This is expensive, but less expensive than letting drift compound indefinitely.

5. Treat AI as a Co-Pilot, Not an Autopilot

AI will drift. It’s probabilistic by nature. Use it accordingly.

- First draft, not final output. Let AI generate, but always review and refine.

- Repetition reinforces. Include your key requirements in every prompt, not just the first one.

- Test and audit. Regularly check AI outputs against your standards. When drift appears, update prompts immediately.

- Version control your prompts. Track changes over time. If output quality degrades, you can compare current prompts to previous versions.
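The repetition and version-control advice can be combined in a few lines: restate the requirements in every prompt, and fingerprint each prompt template so logged outputs can be traced to the exact version that produced them. `REQUIREMENTS`, `build_prompt`, `prompt_version` and `prompt_diff` are hypothetical names; Git would still hold the full history, with the standard library's `difflib` offering a quick side-by-side.

```python
import difflib
import hashlib

# Hypothetical key requirements, restated on every request rather than only the first.
REQUIREMENTS = (
    "Use British spelling.",
    "Start with the key metric.",
    "No preamble.",
)


def build_prompt(user_message: str, requirements: tuple[str, ...] = REQUIREMENTS) -> str:
    """Prepend the full requirement list to each message so it never drops out of context."""
    rules = "\n".join(f"- {rule}" for rule in requirements)
    return f"Requirements:\n{rules}\n\nRequest:\n{user_message}"


def prompt_version(template: str) -> str:
    """Short content hash to log alongside each output."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def prompt_diff(old: str, new: str) -> str:
    """Unified diff between two prompt versions."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile="prompt_v1",
            tofile="prompt_v2",
        )
    )
```

When output quality dips, grouping logged outputs by `prompt_version` shows whether the change coincided with a prompt edit or happened under an unchanged prompt.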

When Drift Is Actually Good

Not all deviation is decay. Sometimes drift is adaptation. Here’s when to embrace it:

User behaviour changes. Your onboarding flow assumed desktop users. Now 70% are mobile. The old flow doesn’t work. That’s not drift, that’s responding to reality.

Better patterns emerge. Your design system used dropdowns for selection. Research shows autocomplete is faster. Updating the pattern isn’t drift, it’s improvement.

Business model evolves. You built for B2C, now you’re B2B. Some product decisions need to change. That’s a strategic pivot, not drift.

The key difference: Intentional change is documented, systematic, and consistently applied. Drift is ad hoc, local, and inconsistent.

The Drift Mindset

Drift is a signal, not just a problem. When you spot it, ask:

- Where is intent unclear? Drift often reveals gaps in documentation, ownership, or communication.

- What incentives are causing this? If teams keep deviating, maybe the original system doesn’t serve their needs.

- Is this local optimisation or strategic misalignment? Sometimes the team is right and the system is wrong.

The goal isn’t perfect adherence to every initial decision. It’s maintaining intentionality over time. Drift happens when systems evolve reactively rather than deliberately.

Practical Starting Points

If you’re dealing with drift right now, start here:

- Pick one system (design, data, roadmap) and audit it. Quantify the drift. How many colour values? How many metric definitions? How many off-strategy features?

- Make it visible. Create a dashboard or report that shows drift metrics. Share it with stakeholders. Drift thrives in darkness.

- Encode one constraint. Pick your most important standard and make it technically enforced. Design tokens for colours. Schema validation for data. Decision records for features.

- Schedule one ritual. Monthly design reviews. Quarterly data audits. Weekly AI prompt checks. Pick the most critical system and start reinforcing it.

- Document one decision. Write down why you made a key product choice and what success looks like. Use it as a comparison point for future proposals.

The Real Cost of Drift

Expedia’s optional field was a static flaw rather than drift, but its $12 million price tag shows what friction in a core flow can cost, and a drifting checkout accumulates exactly that kind of friction. The expense wasn’t just the immediate revenue loss. It was the compound friction: confused customers, failed transactions, support tickets, and the engineering time required to trace the problem back to a single optional field.

Airbnb’s Design Language System consolidation required assembling a dedicated team, external workspace, and months of focused work to address fragmentation that had accumulated through hundreds of small, locally reasonable decisions.

Drift doesn’t announce itself. It accumulates silently until the cost of fixing it exceeds the cost of living with it. At that point, you’ve already lost.

Product integrity isn’t about getting it right once. It’s about staying right, over time, across teams, through change. That requires vigilance, systems, and the uncomfortable discipline of saying no to reasonable-sounding compromises that don’t serve the larger intent.

Because in the end, drift isn’t about messy code or inconsistent designs. It’s about losing the plot. And once you’ve lost it, every decision becomes arbitrary, every debate becomes political, and every team optimises locally while the product decays globally.

Design against drift. Or drift will design your product for you.

Frequently Asked Questions

What is product drift?

Product drift is the gradual erosion of a product’s original intent through accumulated small decisions that each make sense individually but collectively move the product away from its strategic vision. It occurs when systems aren’t actively maintained and reinforced, causing them to default to what’s easiest rather than what was originally intended.

What causes product drift?

Product drift is caused by four main factors: entropy (systems naturally moving towards disorder without active maintenance), local optimisation (teams solving immediate problems without considering system-wide impact), fading intent (the original reasoning behind decisions getting lost over time), and weak feedback loops (drift accumulating slowly and invisibly until it becomes endemic).

How is product drift different from a design mistake?

Product drift requires change over time and accumulation, whereas a design mistake is a static flaw. For example, a confusing form field that’s always been present is a design mistake. Drift would be a checkout process that started with 3 fields and gradually accumulated 20 fields through incremental additions, each seeming reasonable in isolation but collectively degrading the experience.

What are examples of product drift?

Common examples of product drift include design systems fragmenting into dozens of colour variations and inconsistent components, data definitions diverging across teams (leading to different “active user” calculations), roadmaps accumulating features that don’t ladder up to strategy, and AI systems reverting to training data patterns despite specific instructions.

How do you detect product drift?

Detect product drift by conducting regular audits (design system consistency checks, data definition reviews, roadmap alignment assessments), monitoring metrics over time (tracking design token usage, conversion rates, component reuse), implementing automated checks (linters, schema validators, design token enforcement), and scheduling recurring reviews (monthly design reviews, quarterly data governance meetings, weekly AI output checks).

How do you prevent product drift?

Prevent product drift by encoding intent as constraints (using design tokens, schema validation, decision records), building drift detectors (automated audits, drift dashboards, review gates), reinforcing through ritual (regular design reviews, data definition meetings, roadmap retrospectives), designing for degradation (versioning, graceful decay paths, scheduled rebuilds), and treating outputs as drafts that require review rather than final deliverables.

What is design system drift?

Design system drift occurs when a unified design language fragments over time as teams add variations, new components, and inconsistent patterns. This happens through small deviations like slightly different button styles, additional colour values outside the defined palette, or duplicate components with different names, eventually leading to a fragmented visual language and increased maintenance costs.

How much does product drift cost?

The cost of product drift varies significantly. Airbnb, for example, needed months of dedicated team effort to consolidate its fragmented Design Language System. Expedia’s $12 million annual recovery from removing one confusing form field came from fixing a static flaw rather than drift, but it shows how much friction in a core flow can cost. Beyond direct costs, drift increases engineering time, reduces design efficiency, creates inconsistent user experiences, and compounds technical debt.

Can product drift be reversed?

Product drift can be reversed through systematic consolidation, though the effort required depends on how long the drift has accumulated. Successful reversal typically requires dedicated team resources, clear consolidation principles, stakeholder buy-in, and a methodical approach to auditing current state, defining target state, and migrating incrementally whilst preventing new drift from occurring during the transition.

What is the three-question drift test?

The three-question drift test helps determine if a deviation is harmful drift or healthy evolution: (1) Can you articulate the original intent? If nobody remembers why a decision was made, drift is inevitable. (2) Is the deviation serving a new, legitimate goal? If it solves a real problem better, that’s evolution; if it’s just easier, that’s drift. (3) Can you consistently replicate the new pattern? If it becomes the new standard applied consistently, it’s intentional change; if it coexists with the original, it’s drift.

How often should you audit for product drift?

Audit frequency depends on team size and velocity, but recommended schedules include: design system audits monthly for active systems or quarterly for stable ones, data definition reviews quarterly, roadmap alignment checks after each planning cycle, checkout and conversion flow analysis monthly, and AI system output reviews weekly for customer-facing systems or monthly for internal tools.

What tools can detect product drift?

Tools for detecting product drift include CSS Stats for finding colour and typography inconsistencies, design system linters for enforcing token usage, schema validation tools for data consistency, component usage analytics for tracking design system adoption, automated testing for UI consistency, and monitoring dashboards for tracking metrics like unique colour values, component proliferation, and pattern adherence over time.

Sources and Further Reading

Expedia Case Study:

- Case Study: How One Simple Change Increased Expedia’s Revenue By $12 Million A Year

- The $12 Million Optional Form Field

Airbnb Design Language System:

- Building a Visual Language: Behind the scenes of our Airbnb design system by Karri Saarinen

- Building (and Re-Building) the Airbnb Design System – React Conf 2019

Form Fields and Conversion Research:

- How Does Form Length Affect Your Conversion Rate – Covers HubSpot, Unbounce, Marketing Experiments, and Eloqua studies

- 5 Studies on How Form Length Impacts Conversion Rates

Spotify Design Systems: